From the Swiss cheese model to the Safety pyramid

Megha Karkera Kanjia MD and Lynn Martin MD MBA

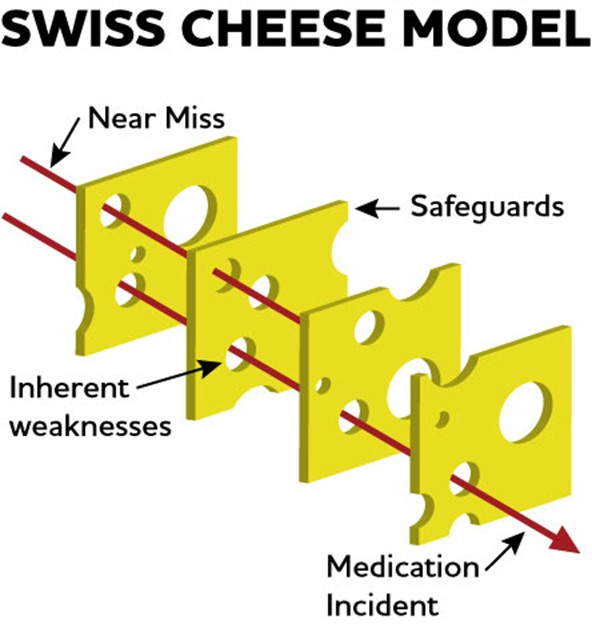

Today’s PAAD comes from a unique inspiration: an obituary of James Reason in the NY Times. (1) A pioneer in the study of human error, Dr. Reason was a professor of psychology at the University of Leicester. The inspiration for his career focus, as recounted in a humorous story by Mr. Rosenwald, came when his cat Rusky caused a distraction that led to a mistake (using cat food to make tea). Pondering that mistake led to a complete shift in his career and ultimately to the very familiar Swiss cheese model of human error. He explained that “all defenses have holes in them and every now and again the holes line up so that there can be some trajectory of accident opportunity.” The holes fall into one of two categories: active failures (mistakes typically made by people) and latent conditions (flaws in system design, such as instructions or construction). Nearly all organizational accidents involve a complex interaction between these two sets of factors.

Professor Reason subsequently used this model to organize and study the prevention of human error in multiple settings, including aviation, the nuclear industry, and healthcare. His metaphor for analyzing and preventing accidents envisions multiple vulnerabilities in safety measures (holes in the cheese) that can align to create a recipe for tragedy (patient harm in healthcare). Some have questioned the validity of Reason’s widely known model of error prevention and safety. (2) Thus, I have asked Megha Karkera Kanjia, Associate Professor, Director of Quality Improvement, Pediatric Anesthesiology, Perioperative and Pain Medicine, Texas Children's Hospital, and one of our PAAD pediatric anesthesiology experts in patient safety, to use this as a springboard for a brief review of other, more recent models of error prevention and safety. Lynn Martin MD MBA

Original article

Rosenwald MS. James Reason, Who Used Swiss Cheese to Explain Human Error, Dies at 86. New York Times. https://www.nytimes.com/2025/03/13/science/james-reason-dead.html

While Professor Reason’s theory is a foundational piece of the Safety pyramid, many safety experts believe that this Safety-1 theory has critical gaps, which later models have built upon it to fill. In the current VUCA (Volatile, Uncertain, Complex, and Ambiguous) healthcare climate, many safety leaders have come to appreciate that Professor Reason’s linear approach to safety is no longer as well grounded as it once was. The healthcare systems we work in are significantly more multifactorial and multidimensional, in a way that is better expressed through the concepts of Safety-2 and Safety-3. (3, 5) The image on the cover of Sidney Dekker’s Drift Into Failure portrays multiple mini tornadoes moving from various directions, swirling into a drain; this is vastly different from the linear concept of latent and active failures in the Swiss Cheese Model.

Safety-2 attempts to understand how frontline workers actually perform everyday work through layers of challenges, and in doing so it shows how non-linear and complex that work really is. Workers are adaptable, which is why they are able to function in a system where medication shortages, complex patients, and the challenging needs of our overburdened system all create opportunities for failure. Another interesting aspect of Safety-2 is that the success of a system can also hide its opportunities for failure. The best example I can think of is the measles vaccine. After Dr. Enders and his team at Boston Children’s Hospital introduced it in 1963, a disease that had plagued many children and adults was nearly eradicated, and the overwhelming percentage of vaccinated children created herd immunity. That herd immunity truly highlighted the success of the vaccine, but the situation has since unfortunately changed. The disease that many of us thought we would never see in our lifetime has made a resurgence, all because the success of the vaccine has hidden the danger of not being immunized. More than 60 years later, our country is again facing a disease that had been nearly eradicated.

The interesting thing about Safety-2 is that the focus is less on failures and more on successes. Why are teams and workers successful in scenarios that are constantly adapting and changing, with increasingly complex pediatric patients growing into adults, while anesthesiologists simultaneously serve as board runner and modify medication administration because of medication shortages? The “decrementalism” identified by Sidney Dekker holds that small, stepwise changes over time cause the failure of a system, as opposed to the major “one-off” latent failure described in the Swiss Cheese Model. The push with the Safety-2 model is to empower workers to discuss “drift” as it occurs in everyday work, before it leads to failures. (3) Regarding the ‘New View’ of safety, safety scientist Todd Conklin says, “Safety is not the absence of errors, but the presence of capacity.” (4)

The truth is that each component of the Safety pyramid is built upon the previous foundation, so the components give a more comprehensive view when considered together. The subsequent concept, Safety-3, highlights an opportunity to design all systems with the frontline worker in mind, ensuring that the input and needs of the worker are kept at the forefront rather than treated as an afterthought. This concept, described by Dr. Nancy Leveson, is one that organizations and teams should strive for. (5) This raises the question: why do we design a system and put it into place before considering frontline workers’ needs and input?

Send your thoughts and comments to Myron, who will post them in a Friday reader response.

References

1. Rosenwald MS. James Reason, Who Used Swiss Cheese to Explain Human Error, Dies at 86. New York Times. https://www.nytimes.com/2025/03/13/science/james-reason-dead.html (accessed March 19, 2025).

2. Perneger TV. The Swiss cheese model of safety incidents: are there holes in the metaphor? BMC Health Serv Res. 2005 Nov 9;5:71. doi: 10.1186/1472-6963-5-71. PMID 16280077.

3. Dekker, S. (2011). Drift into Failure: From Hunting Broken Components to Understanding Complex Systems (1st ed.). CRC Press. https://doi.org/10.1201/9781315257396

4. Conklin, T. (2012). Pre-Accident Investigations: An Introduction to Organizational Safety (1st ed.). CRC Press. https://doi.org/10.4324/9781315246178

5. Leveson, Nancy. "Safety III: A Systems Approach to Safety and Resilience." MIT Engineering Systems Lab. sunnyday.mit.edu/safety-3.pdf. 2024.